Globally, scams have been one of the most prominent trends in financial crime in recent months, and they will inevitably become more sophisticated and consolidated in the coming years.

The scam, an ever-changing yet old and familiar attack vector, is advancing in complexity and effectiveness. The profitability of these attacks is attracting a growing number of fraudsters and criminal organizations, and the impact is felt hardest by financial institutions and their customers.

Almost naturally, old fraud techniques and new artificial intelligence capabilities are converging, substantially increasing the effectiveness of scams and the number of potential victims who can be caught up in them.

This reality is forcing the industry to modify its approach to fraud prevention. Financial institutions, their customers, regulators and even the justice system have to adapt to scenarios that did not exist until now but are being seen more and more frequently.

One of the most talked-about topics in recent months is the advancement of Artificial Intelligence (AI), because of the revolution it represents in the way we relate to technology and the possibilities it opens up in many areas of our lives.

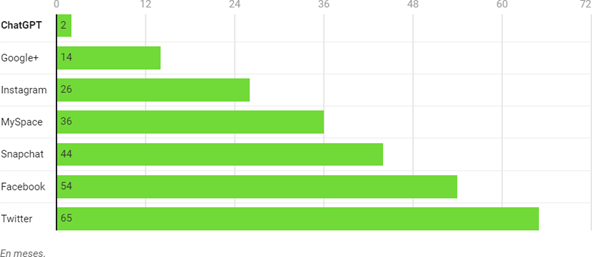

OpenAI is already the fastest-growing company in the history of the Internet in terms of user volume during its first months of operation:

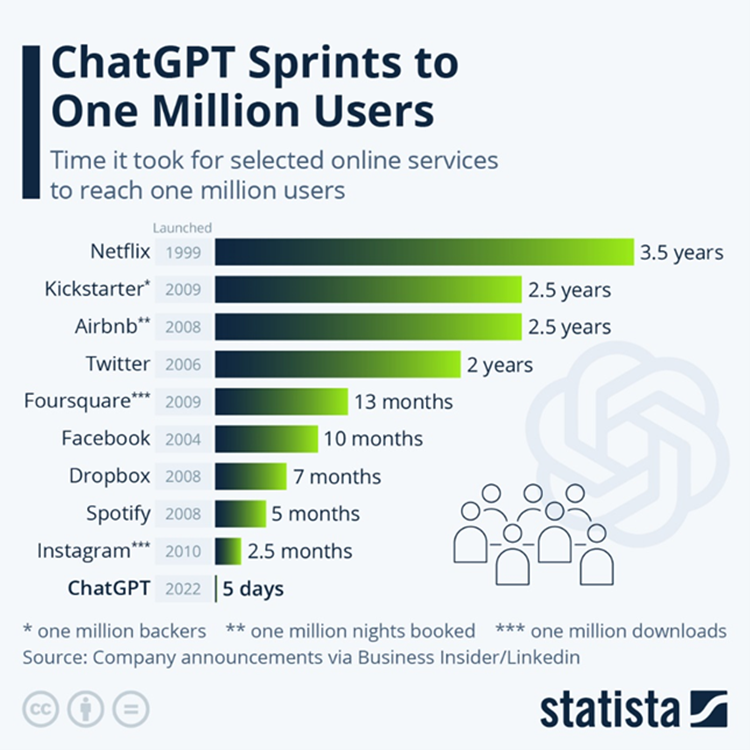

It reached 1 million users in 5 days, and 100 million users in just 2 months.

OpenAI is currently the most visible face of AI, thanks to its trained language model “ChatGPT”, whose newly released GPT-4 version offers even more powerful functionality, and its text-to-image generator “DALL-E 2”. Beyond these famous applications, we are facing an exponential increase in the possibilities that AI offers and in the options that anyone with Internet access has to exploit this technology.

Automatic generation of web pages from a description of the content, quality improvement for photos, video and audio, voice recognition, and generation of programming code are just some examples of the countless applications of AI.

Other capabilities, such as the artificial generation of voice and video from samples (deepfakes) or the automatic creation of content (notifications and warnings) based on real examples, can inadvertently become loopholes for exploitation by fraudsters and organized crime.

AI allows the generation of realistic content that is almost impossible to differentiate from the original, whether it is a web page (Phishing), a text message (Smishing), a call (Vishing), a QR code (QRishing) or a video. This makes all users potential targets for scams, especially those who were already vulnerable because they are less familiar with technology or unaware of existing threats.

Without making large investments, fraudsters have better tools at their disposal every day and are not hesitating to use them. How does this new scenario impact financial institutions and their customers?

We are seeing the use of AI by fraudsters translate into more effective social engineering, in which, for example, customers of financial institutions are persuaded to make monetary transactions.

These scenarios vary. They include scams built on the promise of a high-return investment in cryptocurrencies (Pig Butchering) or other assets, romance scams in which a person gains enough emotional trust to create a situation where they urgently request money, and confidence scams in which a third party poses as a family member or acquaintance in distress to obtain a money transfer.

On other occasions, the payer does not suffer direct losses and may even obtain some economic benefit, without being aware that they have been tricked into operating a mule account and carrying out actions related to money laundering.

These scenarios are very difficult to detect, because it is the customer who initiates and authorizes the operation. This is where the ability of technology to identify anomalous behavior makes the difference.

Increasingly, we find cases showing that, even though the payer carried out the transaction or transactions associated with a scam or fraud, the payer's financial institution could have detected activity that did not match the customer's usual behavior. In some of these cases, the courts side with the customer.

Regulators, aware of the rise in scam cases and the impact they have on consumers, are establishing measures to protect them. For this reason, financial institutions are beginning to implement technology and strategies to identify and prevent these types of cases.

In addition, we are seeing the media and reputational repercussions that these cases can have, because of the empathy they evoke, the vulnerable profile that victims often show, and the economic relevance of this type of crime.
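As a purely illustrative sketch of what "activity that does not match the customer's usual behavior" can mean, the snippet below flags a payment whose amount lies far from a customer's own payment history. It is a deliberately simple z-score check, not a description of any vendor's or institution's detection method; the function name `is_unusual_amount`, the sample amounts, and the threshold of 3 standard deviations are all assumptions made for the example.

```python
from statistics import mean, stdev


def is_unusual_amount(history, amount, threshold=3.0):
    """Return True when `amount` deviates strongly from past payment amounts.

    Hypothetical helper: `history` is a list of the customer's previous
    payment amounts, `threshold` is the number of standard deviations
    treated as unusual (an assumed value, not an industry standard).
    """
    if len(history) < 2:
        return False  # not enough history to estimate typical behavior
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Every past payment was identical; any different amount stands out.
        return amount != mu
    return abs(amount - mu) / sigma > threshold


# Invented sample data for one customer.
past_payments = [42.0, 55.0, 38.0, 61.0, 47.0]

print(is_unusual_amount(past_payments, 50.0))    # close to the usual range
print(is_unusual_amount(past_payments, 5000.0))  # far outside the usual range
```

A real system would consider many more signals than the amount alone (payee, device, timing, channel), which is why a single-feature rule like this one is only a starting point for the idea.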

Artificial Intelligence and Scams versus Financial Institutions & Artificial Intelligence

Organized crime is equipping itself with AI-based techniques to take advantage of its targets' vulnerability. It is time to respond with skilled teams that rely on technologies that have long been using AI to identify and prevent the known and new modus operandi that fraudsters use to carry out scams.

At Featurespace, we have spent years developing our technology to make the world a safer place to transact, knowing that it must also be geared to detect previously unseen scenarios. That is why it performs well against new threats such as scams in which fraudsters rely on AI techniques to increase their effectiveness and profitability.

We have recently produced a comprehensive Scam guide for identification and prevention, providing a detailed overview of this type of crime. We are currently collecting and analyzing data from Latin America to also provide information on scams in the region.

Additional challenges

Beyond the complex challenge of detecting and preventing scams, cases of first party fraud are also expected to increase, both because of the current economic situation and because of growing regulatory pressure in favor of customers. Both contexts are traditionally exploited by fraudsters, whether they are groups operating in an organized manner or individuals operating on an ad hoc basis.

The greatest difficulty arises when it comes to differentiating between real scams and simulated scams. Here again, effective technology for detecting behavioral anomalies, augmented with the necessary signals, must be combined with prevention strategies based on knowledge of the products and the profile of the customers.

But there is plenty of scope for the latter, which will be discussed in subsequent articles.
